…and improving on HLS latency and our ability to scale in the process

Background

For over a decade we have operated a suite of virtual sports games on our website, with the ability to watch each event via a video stream. These streams have always been encoded and packaged for distribution to the client player by systems and appliances run ‘on premise’ within bet365’s data centres.

Throughout this time the products and solutions in place have evolved in accordance with the technologies behind live and on-demand streaming across the internet and the client players within the browser (we have come a long way since embedding the Windows Media Player control in HTML was the de facto standard!).

What had stayed consistent in the original design, though, was the SDI (Serial Digital Interface) standard for transmitting video and audio content across our internal network.

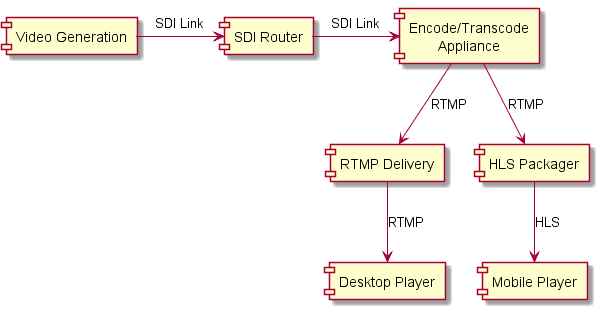

The diagram below shows how this worked at a high level. The video and audio content generated at source is transmitted over an SDI cable into an SDI router, which forwards it to an encoder according to its configuration. Because SDI is not carried on the LAN, the physical boxes must be in close proximity so that the cable can be connected to the SDI ports. The Encoder Appliance then encodes the video and audio, transcodes them into multiple bitrates and sends the result on for delivery using RTMP Push.

Problems Faced

With the growth of the product and the introduction of these streams to the mobile player using HLS (HTTP Live Streaming), we found that the amount of physical kit required was consuming too much rack space in our data centres.

The maintenance and support of this platform also required specialist skills, and faults were hard to diagnose. Upgrades were problematic too, and the SDI cards in the video generation servers were expensive and tied us to specific hardware and operating systems.

The SDI approach was our biggest problem when it came to growing our product, due to the one-to-one relationship between two physical pieces of hardware. The SDI Router gave us some flexibility, but more for resilience than for the ability to easily add more sports. In addition, each video generation server at source could only host one Virtual Sports product. With 11K events streamed daily across 13 virtual sports, 11 for our Italy offering, and support for business continuity, we had a total of 48 physical servers to host the video generation and 24 encoding appliances (2 sports per encoder).
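The hardware totals above can be reproduced with a little arithmetic. The sketch below is one reading of the figures, not a statement of how the estate was actually laid out: it assumes the 11 Italy sports are counted in addition to the 13, and that business continuity doubles the number of video generation servers. Both assumptions are ours for illustration.

```python
# Figures quoted in the text.
SPORTS = 13
ITALY_SPORTS = 11
SPORTS_PER_ENCODER = 2

# Assumption (not stated in the text): business continuity means a second
# copy of every video generation server.
CONTINUITY_FACTOR = 2


def video_servers(sports, italy_sports, continuity_factor):
    """One physical server per sport feed, since a server hosts one product."""
    return (sports + italy_sports) * continuity_factor


def encoders(servers, sports_per_encoder):
    """Number of encoding appliances needed, rounded up."""
    return -(-servers // sports_per_encoder)  # ceiling division


servers = video_servers(SPORTS, ITALY_SPORTS, CONTINUITY_FACTOR)
appliances = encoders(servers, SPORTS_PER_ENCODER)
print(servers, appliances)  # 48 24
```

Under these assumptions every new sport costs two more servers and one more encoder, which is the scaling problem described above.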

We also faced a new problem in that browsers such as Google Chrome were defaulting to HTML5, and users would be asked to allow any Flash content to run. Adobe is now planning to remove support for Flash altogether by the end of 2020, and browser support will be phased out ahead of this date. As Flash offers the best low-latency live streaming format in the form of RTMP live streams, we now have latency concerns.

We had a packed roadmap of new virtual sports to add to the portfolio, but these had to be put on hold because the current solution was not agile enough to allow us to scale. So in 2017 we began looking at an alternative solution which would solve all of these issues.

The R & D

We put some time into researching and learning more about the world of video streaming and how other companies operate live streams across the internet. We found the setup we already had in place was relatively common in that Encoding Appliances were used to transcode and package video and audio content for a range of different media platforms either ‘on premise’ or ‘in the cloud’.

From speaking with numerous providers of streaming solutions we found a common sales pitch, focused on the User Interface for setting up and managing the encoding configuration and monitoring systems. What we were more interested in were the technical aspects of how the software encoded and transcoded the streams. From there we wanted to know what lower-level settings we could tweak in order to get the lowest latency. Unfortunately we found that the ability to do this was limited with the products we trialled.

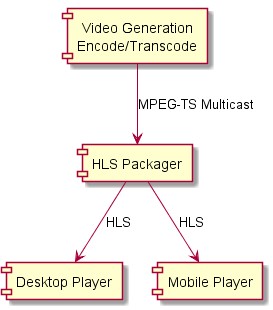

We had learned however that a common alternative to SDI for transmitting the content was MPEG-TS and Multicast. Whilst most 3rd party solutions supported this we had come to realise that this is something we could do ourselves at source, thus removing another layer in the architecture completely.

MPEG-TS And Multicast

Working in conjunction with our Virtual Sports provider we agreed to work on a POC for producing MPEG transport streams (MPEG-TS) at source which could be distributed on our network using Multicast.

With MPEG-TS you can package multiple video and audio streams in a single container. This was perfect for us as we require video streams at multiple bitrates for Adaptive Bitrate Streaming (ABR) and Audio content for commentary in multiple languages.

Multicast is a technique for one-to-many communication over an IP network, so it solved the one-to-one mapping problem we had with SDI. We could just pick up the MPEG-TS container off the network from any machine, provided it was on the same VLAN.
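To make "picking the container up off the network" concrete, here is a minimal sketch of a multicast receiver using Python's standard `socket` module. The group address `239.1.1.1` and port `5000` mentioned in the usage note are placeholders, not values from our network. The only MPEG-TS facts relied on are that packets are 188 bytes long and start with the sync byte `0x47`.

```python
import ipaddress
import socket

TS_PACKET_SIZE = 188  # fixed MPEG-TS packet length in bytes
TS_SYNC_BYTE = 0x47   # first byte of every MPEG-TS packet


def membership_request(group, interface="0.0.0.0"):
    """Build the ip_mreq payload: group address followed by local interface."""
    if not ipaddress.ip_address(group).is_multicast:
        raise ValueError(f"{group} is not a multicast address")
    return socket.inet_aton(group) + socket.inet_aton(interface)


def open_receiver(group, port, interface="0.0.0.0"):
    """Open a UDP socket and join the multicast group on the given port."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                    membership_request(group, interface))
    return sock


def split_ts_packets(datagram):
    """Split one UDP payload into 188-byte transport stream packets."""
    if len(datagram) % TS_PACKET_SIZE:
        raise ValueError("payload is not a whole number of TS packets")
    packets = [datagram[i:i + TS_PACKET_SIZE]
               for i in range(0, len(datagram), TS_PACKET_SIZE)]
    if any(packet[0] != TS_SYNC_BYTE for packet in packets):
        raise ValueError("missing MPEG-TS sync byte")
    return packets
```

Any number of machines can call `open_receiver("239.1.1.1", 5000)` and then `split_ts_packets(sock.recv(2048))` at the same time without the sender knowing or caring, which is exactly what SDI could not give us.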

So we had a much simpler solution with reduced tiers in the Architecture thus reducing the footprint of our Virtual Sports Platform:

FFMPEG And NGINX

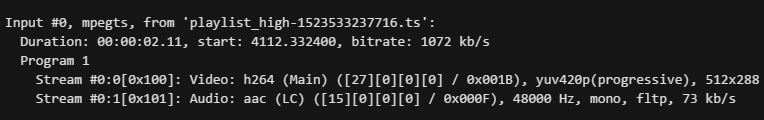

To achieve this we used ffmpeg to encode the video as H.264, transcode it into multiple bitrates, encode the multiple audio channels and output the result as an MPEG-TS container on multicast address space. Yes, ffmpeg is capable of doing lots of things, and it's open source!
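As an illustration of the kind of ffmpeg invocation this implies, the sketch below builds an argument list in Python. It is not the command used in production: the bitrates, languages, input name and multicast address are hypothetical, and per-rendition scaling is left out for brevity. The flags themselves (`-map`, `-c:v libx264`, `-b:v:N`, `-metadata:s:a:N`, `-f mpegts` and the `udp://` output with `pkt_size`) are standard ffmpeg options.

```python
def encode_command(source, bitrates_kbps, audio_languages, group, port):
    """Build an ffmpeg command: one input, many renditions, one MPEG-TS output."""
    cmd = ["ffmpeg", "-i", source]

    # One output video stream per bitrate, all taken from the first input video.
    for _ in bitrates_kbps:
        cmd += ["-map", "0:v:0"]

    # One output audio stream per commentary language. Assumes the input
    # carries the audio tracks in the same order as audio_languages.
    for index in range(len(audio_languages)):
        cmd += ["-map", f"0:a:{index}"]

    cmd += ["-c:v", "libx264", "-c:a", "aac"]

    # Per-stream bitrate and language tag.
    for index, kbps in enumerate(bitrates_kbps):
        cmd += [f"-b:v:{index}", f"{kbps}k"]
    for index, language in enumerate(audio_languages):
        cmd += [f"-metadata:s:a:{index}", f"language={language}"]

    # 1316 bytes = 7 MPEG-TS packets of 188 bytes per UDP datagram.
    cmd += ["-f", "mpegts", f"udp://{group}:{port}?pkt_size=1316"]
    return cmd


# Hypothetical values for illustration only.
command = encode_command("source.mp4", [3000, 1500, 600], ["eng", "ita"],
                         "239.1.1.1", 5000)
print(" ".join(command))
```

The list can be passed straight to `subprocess.run(command)` on a machine with ffmpeg installed; building it as a list rather than a string avoids shell quoting problems.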

To package this for delivery to the client as HLS we used NGINX coupled with ffmpeg, configured to grab the required MPEG-TS stream off the network and segment it into 2 second chunks, each chunk containing one video and one audio file. This was repeated for each bitrate and audio language combination.
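A minimal sketch of the segmenting step, again as a Python argument builder. It assumes ffmpeg's built-in `hls` muxer with stream copy; the playlist length, output paths and multicast address are hypothetical, and how ffmpeg is launched alongside NGINX is not shown. Note that with `-c copy` segments can only be cut on keyframes, so the upstream encoder's keyframe interval would need to line up with the 2 second target.

```python
from itertools import product


def segment_command(group, port, video_index, audio_index, playlist_path,
                    segment_seconds=2, playlist_length=5):
    """Build an ffmpeg command that turns one video/audio pair into HLS."""
    return [
        "ffmpeg",
        "-i", f"udp://{group}:{port}",   # join the multicast stream
        "-map", f"0:v:{video_index}",    # pick one bitrate
        "-map", f"0:a:{audio_index}",    # pick one commentary language
        "-c", "copy",                    # repackage only, no re-encode
        "-f", "hls",
        "-hls_time", str(segment_seconds),
        "-hls_list_size", str(playlist_length),
        "-hls_flags", "delete_segments",  # drop segments that leave the playlist
        playlist_path,
    ]


# One segmenter per bitrate and language combination (hypothetical names).
bitrates = ["3000k", "1500k", "600k"]
languages = ["eng", "ita"]
commands = [
    segment_command("239.1.1.1", 5000, v, a,
                    f"/var/www/hls/{bitrates[v]}_{languages[a]}.m3u8")
    for v, a in product(range(len(bitrates)), range(len(languages)))
]
print(len(commands))  # 6
```

Because every segmenter reads the same multicast stream, adding a language or a bitrate only adds another process, not another piece of hardware.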

To bring this all together a manifest file (.m3u8) is created, and everything is served from the NGINX webserver to the client over HTTP.
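For readers unfamiliar with HLS, the manifest that ties the bitrates together is a small text file. The sketch below generates a master playlist using only the `#EXTM3U` header and the `#EXT-X-STREAM-INF` tag with its required `BANDWIDTH` attribute; the bandwidth values and playlist names are hypothetical, and a real manifest would normally carry more attributes (resolution, codecs and so on).

```python
def master_playlist(variants):
    """Render an HLS master playlist from (bandwidth_bps, uri) pairs."""
    lines = ["#EXTM3U"]
    # List the highest bitrate first; the player switches between variants
    # according to the bandwidth it measures.
    for bandwidth_bps, uri in sorted(variants, reverse=True):
        lines.append(f"#EXT-X-STREAM-INF:BANDWIDTH={bandwidth_bps}")
        lines.append(uri)
    return "\n".join(lines) + "\n"


# Hypothetical variants for one audio language.
playlist = master_playlist([
    (600_000, "600k_eng.m3u8"),
    (3_000_000, "3000k_eng.m3u8"),
    (1_500_000, "1500k_eng.m3u8"),
])
print(playlist)
```

Each URI points at one of the media playlists written by the segmenter, and the player fetches everything over plain HTTP.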

Conclusion

The POC was successful as it met the following success criteria:

- Reduced hardware and footprint in our data centres

- Ability to add more sports easily

- A simplified solution, making fault diagnosis easier and recovery quicker

- Specialist skillsets were now more transferable into the development teams

- Reduced latency of our HLS streams

- Reduced costs

This solution has been successfully rolled out across parts of our production estate and we are seeing the benefits listed above.